Home / Blog / Insights / Building a Secure Foundation for AI Adoption: Cybersecurity Prerequisites

•

Building a Secure Foundation for AI Adoption: Cybersecurity Prerequisites

Artificial intelligence (AI) tools like Microsoft Copilot are changing how businesses operate, promising increased efficiency, better insights, and streamlined workflows. However, as exciting as these innovations are, they come with a double-edged sword: the potential for cybersecurity risks.

In this blog, we’ll explore how organizations can balance AI and cybersecurity, starting with practical steps for creating a safe environment for AI adoption. From understanding the role of data governance to setting up secure “clean rooms” for AI tools, we’ll equip you with the essentials to adopt AI confidently and responsibly, diving into:

- How has generative AI affected security?

- Data security management for AI adoption

- A deep dive into data governance and clean rooms

Let’s get into how you can build a secure foundation for AI adoption while safeguarding your data and maintaining compliance.

How Has Generative AI Affected Security?

Adopting AI tools like Microsoft Copilot opens the door to promising possibilities, but it also introduces unique challenges that your business should address upfront. AI systems thrive on data—sometimes sensitive, outdated, or even irrelevant—and the way they process this information can introduce risks if not managed carefully.

One major concern is unauthorized access to sensitive data. For example, it’s entirely possible for AI to inadvertently pull confidential information, such as personnel records, financial details, or proprietary business data, into its analysis. Without proper safeguards, this information could be exposed to the wrong people, leading to privacy violations.

This could be especially damaging in the case of malicious misuse. Picture this: a disgruntled employee could manipulate AI to uncover internal salary information or confidential operational details. When misused, these tools simply amplify any existing security gaps, leaving you open to risk.

Another emerging threat is the use of generative AI for phishing attacks, much like these real-life examples. Cybercriminals can leverage AI tools, like ChatGPT, to craft highly convincing and personalized phishing emails that are difficult to distinguish from legitimate ones. For instance, an attacker might generate an email that appears to be from a trusted colleague or company executive, asking the recipient to click on a malicious link or provide sensitive information.

In some cases, attackers have even used deepfake technology to escalate their schemes. Take for example this incident, where a CEO was tricked into transferring large sums of money after receiving audio messages that appeared to come from a trusted individual, but were actually the result of a sophisticated deepfake.

Finally, compliance challenges. Using AI tools without a clear framework can result in unintentional breaches of regulations, like Canada’s PIPEDA or GDPR. If AI unintentionally surfaces or shares personal information that should have remained protected, like an employee’s SIN number or medical records, it could put your company at legal or regulatory risk.

To put it simply, AI has the potential to unlock value—but only if its implementation is supported by proactive security measures. Addressing these challenges starts with understanding those prerequisites.

Data Security Management for AI Adoption

While adopting AI in your organization is exciting, it’s not as simple as flipping a switch. Here are some key actions to take before you roll out the red carpet for the latest and greatest AI programs:

1) Adopt a Data Governance Framework

To adopt AI effectively, you need a strong handle on your data quality and security. Begin by organizing your data: classify and label it to identify what is public, sensitive, or restricted. This clarity ensures that the most critical data gets the protection it needs. Then, implement a system for routinely archiving or deleting outdated and irrelevant information. Keeping your data current and relevant prevents AI tools from producing false or misleading outputs based on obsolete data.

2) Implement Access Controls

When you turn on an AI like Microsoft Copilot within your organization’s environment, it’s almost like creating a search engine for your business data. That means that without the right access controls in place, sensitive data could become available to anyone in your organization.

Implementing access controls ensures that only authorized individuals can interact with that information. Role-based access can prevent anyone from entering a search query and seeing something they shouldn’t see. For example, senior leadership might have broader access to corporate financial information, while more junior employees are restricted from that type of information.

3) Invest in Data Loss Prevention

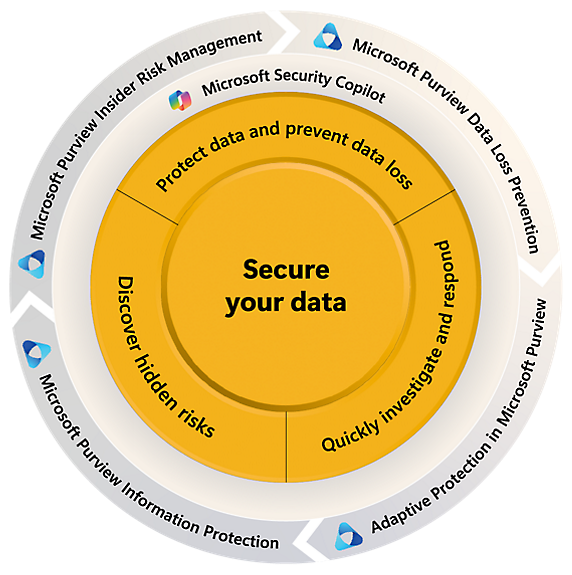

While data governance focuses on creating policies and frameworks for managing data, Data Loss Prevention (DLP) takes a more active role in enforcing those policies. DLP tools work in real-time to monitor and prevent the unauthorized sharing or misuse of sensitive information, adding an essential layer of protection to your data governance strategy.

Tools like Microsoft Purview help enforce DLP rules by detecting and blocking attempts to share confidential information like credit card numbers or personal identifiers.

4) Strengthen Endpoint and Network Security

AI tools rely on multiple devices and networks, which makes them attractive targets for cyber threats. Protect your endpoints—like laptops, tablets, and smartphones—by using firewalls, encryption, and keeping software up to date. On the network side, use tools like VPNs and intrusion detection systems to secure your infrastructure. You can think of this like building a digital moat around your AI systems to keep risks at bay.

To mitigate, use tools for endpoint protection and intrusion detection systems to secure your infrastructure. You can think of this like building a digital moat around your AI systems to keep risks at bay.

5) Train Your Team to Use AI Safely

Your employees are your strongest defense against AI-related risks—but only when they’re prepared. Offer regular training to help them recognize red flags, like sharing sensitive data in public AI tools like Chat GPT or misinterpreting AI-generated outputs. The goal is to teach your team to use AI responsibly and avoid mistakes that could jeopardize your organization’s security.

6) Create an AI Policy That Works

Set clear guidelines for how AI tools can (and can’t) be used within your organization. Spell out what data is off-limits, how to validate AI outputs, and the steps to ensure compliance with laws like GDPR or PIPEDA. A solid policy gives your team the structure they need to use AI confidently while keeping your organization on the right side of the law. Remember to implement regular reminders and training sessions with your team, because enforcing your policy takes more than a one-and-done approach.

7) Assess Risks and Test Your AI Regularly

Before rolling out AI tools, take the time to evaluate your systems for potential vulnerabilities. Run regular tests to uncover weak spots and make adjustments as needed. But don’t stop there—keep testing AI outputs to make sure they’re accurate, secure, and free from bias. Plan regular tune-ups to keep your AI running smoothly and safely.

By addressing these prerequisites, you can create a secure, well-managed environment that allows AI to drive innovation while keeping your data and systems safe.

Deep Dive: Data Governance and Clean Rooms

We’ve covered the many prerequisites that come with AI adoption, but there’s one core concept that can help you roll out AI strategically. It’s called a “clean room.”

A data clean room is like a secure, private workspace where your team can safely work with sensitive data for AI projects. It’s designed to make sure only the right people and systems can access the information they actually need—nothing more, nothing less. Think of it as a highly controlled environment that keeps your data safe while your organization experiments with AI.

Here’s what makes a clean room great for AI adoption:

- One copy of data: There’s only one version of the data, so you don’t have to worry about duplicates floating around or outdated information being used.

- Tight access controls: Only those who absolutely need the data for their work can get to it, cutting down on unnecessary exposure.

- Governance and monitoring: Regular audits, archiving old data, and keeping an eye on who’s accessing what ensures everything stays secure and compliant.

In short, a clean room gives you a solid foundation for adopting AI securely. It makes sure your data stays safe and your AI delivers accurate, reliable results—without any unpleasant surprises along the way.

Setting Up a Clean Room for AI Experimentation

Think of a clean room as your digital sandbox—an isolated environment designed to maintain strict data access controls while you experiment with innovation. If you want to keep the clean room, well… clean, then you’ll need to plan carefully—but the payoff is a safe, controlled environment to play in. Here’s how to get started:

- Keep AI Experimentation Separate — Create a dedicated space for AI projects that’s completely isolated from your live systems. This avoids accidental disruptions and makes sure your sensitive data stays protected.

- Limit Access to the Essentials — Use tools like Microsoft Purview to enforce strict, role-based access. Only those directly involved in the project should have access to specific data—and even then, only to what they truly need.

- Define Clear Data Rules — Establish protocols for how data is handled within the clean room, from removing outdated information to archiving project data securely once the work is done.

- Add Layers of Protection — Implement encryption and conditional access policies to safeguard your data at all times, whether it’s being stored or shared. These measures ensure your information remains secure even if access is attempted by unauthorized users.

- Monitor and Respond Proactively — Leverage AI-specific tools, like Microsoft’s advanced threat protection, to monitor activity and flag unusual behavior. For example, unauthorized attempts to share data can be blocked automatically, with alerts sent to your team.

If this feels overwhelming or you’re unsure where to begin, the team at Convverge is here to help you set up these environments and guide you through the process.

Are You AI Ready?

Adopting AI tools like Microsoft Copilot doesn’t have to be risky. At Convverge, we specialize in helping businesses like yours innovate safely. From assessing your data environment for AI readiness to setting up secure clean rooms and training your team on responsible AI usage, we provide the expertise you need to succeed. Plus, with ongoing cybersecurity support, we can help ensure your systems stay protected as the technology evolves.

Ready to take the next step? Contact us today to learn how we can help you build a secure, AI-supported business.